Author: Mark Waser

Abstract. We constantly hear warnings about super-powerful super-intelligences whose interests, or even indifference, might exterminate humanity. The current reality, however, is that humanity is actually now dominated and whipsawed by unintelligent (and unfeeling) governance and social structures and mechanisms initially…

Divi has entered the home stretch, with our limited beta starting next week. Since I finally have some breathing room, I thought I’d share some of what we’ve been doing and where we’re going. One of Divi’s most progressive features…

In the right hands, can AI be used as a tool to engender empathy? Can algorithms learn what makes us feel more empathic toward people who are different from us? And if so, can they help convince us to act…

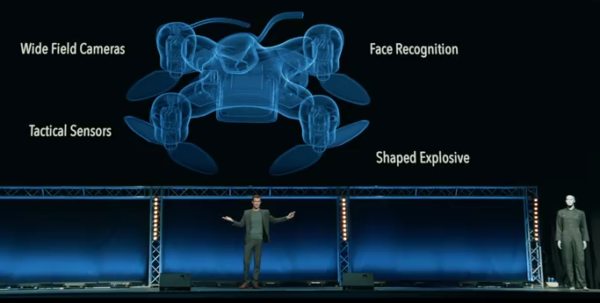

So . . . . the Elon Musk anti-AI hype cycle has started up again (“Elon Musk, Warren G, and Nate Dogg: It’s Time to ‘Regulate’ A.I. Like Drugs”). Worse, we have Stuart Russell’s movie, Slaughterbots.

[Screen-grab from Slaughterbots]

Actually,…

The last six months have seen a rising flood of publicity about “killer robots” and autonomy in weapons systems. On November 19, 2012, Human Rights Watch (HRW) issued a 50-page report, “Losing Humanity: The Case against Killer Robots”, outlining concerns…

I admire Eliezer Yudkowsky when he is at his most poetic: our coherent extrapolated volition is our wish if we knew more, thought faster, were more the people we wished we were, had grown up farther together; where the extrapolation…

Rice’s Theorem (in a nutshell): Unless everything is specified, anything non-trivial (not directly provable from the partial specification you have) can’t be proved.

AI Implications (in a nutshell): You can have either unbounded learning (Turing-completeness) or provability – but never…

“Some problems are so complex that you have to be highly intelligent and well informed just to be undecided about them.” – Laurence J. Peter

Numerous stories were in the news last week about the proposed Centre for the Study…

[Part 1] (originally published December 10, 2012) You’re appearing on the “hottest new game show” Money to Burn. You’ll be playing two rounds of a game theory classic against the host with a typical “Money to Burn” twist. If you…

Over at Facing the Singularity, Luke Muehlhauser (LukeProg) continues Eliezer Yudkowsky’s theme from “Value is Fragile” with “Value is Complex and Fragile”. I completely agree with his last three paragraphs. Since we’ve never decoded an entire human value system, we…

Maybe I’m missing something, but it looks like everyone is overlooking the obvious when discussing the so-called “hard problem” of consciousness (per Chalmers [1995], the “explanatory gap” of “phenomenal consciousness” or “qualia” or “subjective consciousness” or “conscious experience”). So let’s…
