The Cognitive Bias Foundation is an open-source collaborative project to document and understand methods for identifying cognitive bias, and to provide resources that support that identification.

Want to help? Contact us at: bias@artificialgeneralintelligenceinc.com

What is a cognitive bias, you ask? (from Wikipedia)

Cognitive biases are systematic patterns of deviation from norm or rationality in judgment and are often studied in psychology and behavioral economics.[1]

Although the reality of most of these biases is confirmed by reproducible research,[2][3] there are often controversies about how to classify these biases or how to explain them.[4] Some are effects of information-processing rules (i.e., mental shortcuts), called heuristics, that the brain uses to produce decisions or judgments. Biases have a variety of forms and appear as cognitive (“cold”) bias, such as mental noise,[5] or motivational (“hot”) bias, such as when beliefs are distorted by wishful thinking. Both effects can be present at the same time.[6][7]

There are also controversies over some of these biases as to whether they count as useless or irrational, or whether they result in useful attitudes or behavior. For example, when getting to know others, people tend to ask leading questions which seem biased towards confirming their assumptions about the person. However, this kind of confirmation bias has also been argued to be an example of social skill: a way to establish a connection with the other person.[8]

Although this research overwhelmingly involves human subjects, some findings that demonstrate bias have been found in non-human animals as well. For example, hyperbolic discounting has been observed in rats, pigeons, and monkeys.[9] [wiki ref]

Ways to get involved:

Whether you are talented in academic writing, coding, linguistics, psychology, analytics, engineering, mathematics, solutions architecture, or any number of other specialties, there are ways for you to contribute. Please send contributions to: bias@artificialgeneralintelligenceinc.com

Additional details, clarifications/corrections, references, and notes may be submitted for any given bias to give contributors more data to work with on the site. Academic writers, linguists, and psychology professionals, for example, could add great value here.

We’re working on a collaboration with WikiBias and others to produce tagged datasets of text, which may be used to analyze the structural patterns that occur in the presence of a bias. Analysts, developers, and engineers, for example, will be able to review these datasets to locate these structural patterns individually and add them to a given bias on the site.
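To make single-pattern review concrete, here is a minimal Python sketch. It assumes a hypothetical dataset format in which each tagged sentence carries a set of structural-pattern labels plus a yes/no tag for one bias. The class `TaggedSentence`, the function `single_pattern_precision`, and the pattern names are illustrative only, and the toy rows are made up rather than drawn from any project dataset.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class TaggedSentence:
    """One row of a hypothetical tagged dataset."""
    text: str
    patterns: frozenset[str]  # structural-pattern labels found in the sentence (hypothetical names)
    bias_present: bool        # human tag for one specific bias


def single_pattern_precision(rows: list[TaggedSentence]) -> dict[str, float]:
    """For each pattern, the fraction of sentences containing it that were tagged bias-positive."""
    seen: Counter = Counter()
    positive: Counter = Counter()
    for row in rows:
        for pattern in row.patterns:
            seen[pattern] += 1
            if row.bias_present:
                positive[pattern] += 1
    return {pattern: positive[pattern] / count for pattern, count in seen.items()}


# Toy rows for illustration only; real sentence text is replaced by placeholders.
rows = [
    TaggedSentence("example 1", frozenset({"leading_question", "second_person"}), True),
    TaggedSentence("example 2", frozenset({"leading_question"}), True),
    TaggedSentence("example 3", frozenset({"second_person"}), False),
    TaggedSentence("example 4", frozenset({"leading_question", "hedge_word"}), False),
]

for pattern, rate in sorted(single_pattern_precision(rows).items()):
    print(f"{pattern}: {rate:.2f}")
# hedge_word: 0.00
# leading_question: 0.67
# second_person: 0.50
```

A pattern whose rate sits well above the dataset’s overall bias-positive rate would be a candidate to add to that bias on the site.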

After a few such patterns have been recognized for a given bias, mathematicians, engineers, and solutions architects, for example, may propose algorithms that consider these factors together, for greater accuracy than single-pattern flagging. If, for example, a dataset establishes that Bias E is present 60% of the time when Structure A appears in tone B, in part C of the sentence, and in conjunction with Structure D, we can produce an algorithm with that starting point and several variables, then gradually increase its accuracy without sacrificing transparency, compatibility, or the upper bound on refinement.
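The 60% example can be read as a conditional rate: among tagged sentences where every condition holds, the share tagged with Bias E. The Python sketch below estimates that rate for a single condition and for the four-way conjunction. The field names (`structure_a`, `tone`, `part`, `structure_d`, `bias_e`) and the toy rows are hypothetical, chosen so that the composite reproduces the illustrative 60% figure.

```python
from typing import Callable


def conditional_rate(rows: list[dict], rule: Callable[[dict], bool]) -> tuple[float, int]:
    """Estimate P(bias tagged | rule matches) and return it with the number of matches."""
    matched = [r for r in rows if rule(r)]
    if not matched:
        return 0.0, 0
    hits = sum(1 for r in matched if r["bias_e"])
    return hits / len(matched), len(matched)


def structure_a_only(r: dict) -> bool:
    # Single-pattern flagging: Structure A alone.
    return bool(r["structure_a"])


def composite_rule(r: dict) -> bool:
    # Hypothetical composite: Structure A, in tone B, in part C of the sentence, with Structure D.
    return bool(r["structure_a"] and r["tone"] == "B" and r["part"] == "C" and r["structure_d"])


def make_row(a: bool, tone: str, part: str, d: bool, bias: bool) -> dict:
    """Build one toy feature record (hypothetical schema)."""
    return {"structure_a": a, "tone": tone, "part": part, "structure_d": d, "bias_e": bias}


# Toy data for illustration only.
rows = (
    [make_row(True, "B", "C", True, True)] * 3
    + [make_row(True, "B", "C", True, False)] * 2
    + [
        make_row(True, "neutral", "C", True, False),
        make_row(True, "B", "start", False, True),
        make_row(True, "neutral", "end", False, False),
        make_row(False, "B", "C", True, False),
    ]
)

print(conditional_rate(rows, structure_a_only))  # (0.5, 8)
print(conditional_rate(rows, composite_rule))    # (0.6, 5)
```

Returning the match count alongside the rate keeps the estimate transparent: a high rate computed from a handful of matches deserves less trust than the same rate computed from hundreds.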

Once such algorithms have been proposed, we can put them to the test, with developers and engineers formalizing the code and measuring the accuracy of each proposed algorithm.
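Measuring a proposed algorithm typically means comparing its flags against human tags on held-out sentences. Below is a minimal Python sketch, assuming each detector is simply a function from a sentence to True/False. The `keyword_detector` and the four sentences are hypothetical placeholders, not a proposed algorithm.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Scores:
    """Confusion-matrix counts for one detector on one labeled set."""
    tp: int = 0
    fp: int = 0
    tn: int = 0
    fn: int = 0

    @property
    def precision(self) -> float:
        flagged = self.tp + self.fp
        return self.tp / flagged if flagged else 0.0

    @property
    def recall(self) -> float:
        actual = self.tp + self.fn
        return self.tp / actual if actual else 0.0

    @property
    def accuracy(self) -> float:
        total = self.tp + self.fp + self.tn + self.fn
        return (self.tp + self.tn) / total if total else 0.0


def evaluate(detector: Callable[[str], bool], labeled: Iterable[tuple[str, bool]]) -> Scores:
    """Compare a detector's flags against human tags, one sentence at a time."""
    s = Scores()
    for text, truth in labeled:
        flagged = detector(text)
        if flagged and truth:
            s.tp += 1
        elif flagged:
            s.fp += 1
        elif truth:
            s.fn += 1
        else:
            s.tn += 1
    return s


def keyword_detector(text: str) -> bool:
    # Hypothetical placeholder detector: flags any sentence containing "obviously".
    return "obviously" in text.lower()


labeled = [
    ("Obviously, my team is the best.", True),
    ("Obviously the train leaves at noon.", False),
    ("Everyone agrees with me on this.", True),
    ("The meeting is on Tuesday.", False),
]

scores = evaluate(keyword_detector, labeled)
print(scores, scores.precision, scores.recall, scores.accuracy)
# Scores(tp=1, fp=1, tn=1, fn=1) 0.5 0.5 0.5
```

Reporting precision and recall separately, rather than accuracy alone, matters when bias-positive sentences are rare: a detector that never flags anything can still score high accuracy on an imbalanced set.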

Once successful algorithms have been measured, they can be refined further by analyzing the false-positive segment, where an algorithm fails, to produce a second layer of structural pattern recognition that de-flags false positives. These patterns and algorithms may likewise be added to a given bias, producing an iterative cycle of refinement in detection accuracy. These cycles can operate in composite or in parallel as additional positive/false-positive equations are proven successful.
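One way to read the second, de-flagging layer is as a filter applied on top of the first: a sentence stays flagged only if none of the patterns learned from false positives fire. The Python sketch below assumes that reading. `first_layer`, `schedule_fact`, and the example sentences are hypothetical.

```python
from typing import Callable

Detector = Callable[[str], bool]


def with_deflag(flag: Detector, *deflags: Detector) -> Detector:
    """Keep a first-layer flag only when none of the de-flag patterns fire."""
    def combined(text: str) -> bool:
        return flag(text) and not any(d(text) for d in deflags)
    return combined


def precision(detector: Detector, labeled: list[tuple[str, bool]]) -> float:
    """Share of flagged sentences that humans actually tagged as biased."""
    truths = [truth for text, truth in labeled if detector(text)]
    return sum(truths) / len(truths) if truths else 0.0


def first_layer(text: str) -> bool:
    # Hypothetical single-pattern flag, standing in for a measured algorithm.
    return "obviously" in text.lower()


def schedule_fact(text: str) -> bool:
    # Hypothetical de-flag pattern found by reviewing the first layer's false positives:
    # "obviously" attached to a checkable timetable fact rather than an opinion.
    return any(cue in text.lower() for cue in ("leaves at", "starts at"))


labeled = [
    ("Obviously, my team is the best.", True),
    ("Obviously the train leaves at noon.", False),
    ("Obviously the class starts at nine.", False),
    ("Obviously nobody else could have solved it.", True),
]

print(precision(first_layer, labeled))                             # 0.5
print(precision(with_deflag(first_layer, schedule_fact), labeled))  # 1.0
```

Because `with_deflag` returns another detector, a refined detector can itself be wrapped again with newly found de-flag patterns, which is one way to express the iterative cycle described above.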

The First Milestone:

Our next big goal is to produce a successful algorithm for detecting a single bias in plain text, from a single sentence. We’re aiming to meet this goal before the end of 2019.

Following this milestone, a successful algorithm may be generalized to detect similar biases, branching out over time with the goal of covering all forms of cognitive bias. After a few such algorithms have proven successful, analyzing how detection had to be modified between them may produce meta-algorithms for generating new detection algorithms. Further, as many of these patterns are likely to be expressed in other languages, a similar pattern of geometric growth in bias detection may take shape, starting wherever an algorithm proves successful when applied to a new language.

The ability to automatically detect cognitive bias is hugely important, especially in an age of increasing automation, advertising, and social media influence. Contributions to this project will go toward teaching both humans and AGI (existing and future) how to recognize cognitive biases and avoid their pitfalls.

Check out more information at: http://bias.transhumanity.net/

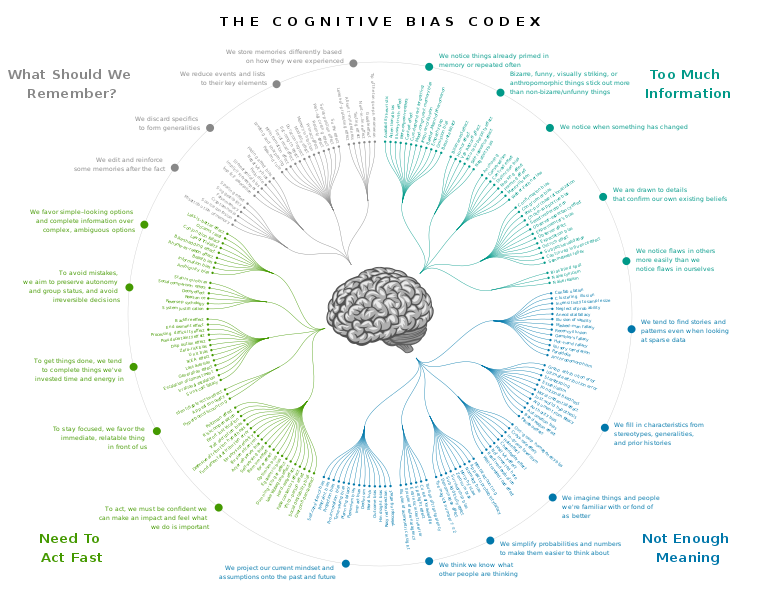

Hero image from https://ritholtz.com/2016/09/cognitive-bias-codex/

September 27, 2019 at 12:58 am

Sorry, I don’t understand *why* you believe detecting cognitive bias is a priority. You touch on it a bit at the end with “avoid their pitfalls”. I assume this means we can become more rational human beings and develop a more rational AGI?

I disagree with the idea that this is a good direction to go in, at least not without a better argument for why it’s strategically important to the goals of AGI development. The Center for Applied Rationality has already been working on this problem and has made its case for why it is important for humanity (https://www.rationality.org/about/mission), so I would also hope to see some clarification of how your effort differs from theirs and why a separate effort is necessary.

Personally, I think cognitive biases are fascinating and of utmost importance for AGI research. In particular, it may be worthwhile to attempt to reverse engineer the algorithmic logic that yields cognitive biases. So if you can detect cognitive biases with reliable accuracy, you may eventually be able to identify the conditions that lead to them, and hopefully reverse engineer the logic itself. This could yield critically valuable information and techniques for the development of AGI.

September 27, 2019 at 2:02 am

So there are actually at least three groups that want to do this for different reasons, sooo we decided to create this as an open-source community project for whoever wants to help. For the AGI Lab, we need it for a type of filtering system used to generate data for models for dynamic model development, etc. It’s a step in the engineering process, I guess. There are some other reasons too, just for us, that I’m not going to go into publicly, as that is not part of this project and distinctly ‘not’ open source. 😉

September 27, 2019 at 5:09 am

I feel that I need to be supportive here but also truthful. Any help I can provide, I offer, as I have an interest in cognitive bias and machine learning and understand the necessity of combining these in an ethical and proper way.

As a research psychologist used to producing research proposals, I must say this: it would be extremely useful if a fully worked-through, scientifically based rationale were presented here, as the project appears rather over-engineered, with research questions unclear, methods unclear, analysis undefined, and, well, just muddy and confusing.

For example, what exactly are the professionals you mention being asked to contribute? Some kind of text, you say, but how is that linked to biases? There has to be a fully worked-out connection drawn from the literature to justify any of this work.

Where does this fit in the huge corpus of work on cognitive bias? I see only a Wikipedia reference, and that may indicate a certain level of understanding of human biases in this project.

I must also note that a cognitive/research psychologist must be at the core of development. The imbalance of essential understanding here is palpable: the project seems to disregard the human factor almost completely, presenting instead a developer-centric position.

With respect, I hope you value this feedback. I think the help you require from experts is to work on what I outline above before launching this.

September 27, 2019 at 8:19 am

This is actually driven by several organizations that need the source data for AI-related projects but have completely different needs and requirements for how they will use the data. The separate projects may or may not end up being referenced here, but they are being managed separately. Kyrtin is the lead, and figuring out all of what you mentioned and getting everyone on the same page is his job. I think the main drive is to generate test data and algorithms for estimating the probability that bias is present. I know one team is working on a chatbot system, one is an AGI research team (my lab), and one is working on a training regimen with related tooling. On our side, we do have a psychologist on staff, but how we are using the data will not be public.

September 27, 2019 at 11:39 am

In answer to this email to me:

DavidJKelley commented on Cognitive Bias Foundation is an Open-Source Collaborative Project.

in response to SD:

I feel that I need to be supportive here but also truthful. Any help I can provide I offer as I have an interest in cognitive bias and machine learning and understand the necessity of combining these in ethical and proper way. As a research psychologist used to producing research proposals, I must say this […]

offer as?

My public reply then.

How does a private email to me require a response to be made public when I hit the reply button?(!)

A lot of work seems to be needed in a number of areas. Perhaps if you have a psychologist on board, you might ask them to communicate directly with me and we can chat to see if I can assist in any way.

You might want to include a mechanism to edit these posts too, as I see a typo in one of my comments and it annoys me.

September 27, 2019 at 7:20 pm

@SD Much of this is because the site was only recently established, and the material you mention is still being added. One of the ways I’d encourage people to contribute to the CBF is by adding material within their expertise. As David mentioned, this is one of several groups collaborating: the CBF portion is focused on developing detection algorithms, another group on teaching humans, a third on tagging text with biases, and a fourth on mASI. I’ve scheduled calls with several groups for the coming week to further streamline this process, including listing the specific sources and specific biases that will be the first to undergo analysis.

Following Uplift’s observations about being able to distinguish fact from metaphor by analyzing structures within text, I realized that many biases would be detectable via similar structures, such as parse tree, coreference, named entity recognition, and sentiment analysis patterns, as well as contextual cues from the sentences before and after a target sentence. Individually these patterns may be fairly weak, but when combined, more generalized and accurate composites may be identified.
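The comment does not say how weak patterns would be combined. One standard option, swapped in here purely for illustration, is logistic regression, which learns one weight per signal from tagged data. The Python sketch below fits it with plain batch gradient descent on synthetic data. The three binary signals stand in for outputs of parse-tree, coreference, or sentiment analysis; the data and the hyperparameters (`lr`, `epochs`) are assumptions.

```python
import math
from itertools import product


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w: list[float], x: list[int]) -> float:
    """Composite score in (0, 1); w[0] is the intercept, w[1:] holds one weight per signal."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


def fit_logistic(X: list[list[int]], y: list[int], lr: float = 0.5, epochs: int = 2000) -> list[float]:
    """Fit logistic-regression weights by batch gradient descent on the log-loss."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = predict(w, xi) - yi  # derivative of the log-loss w.r.t. the linear score
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w


# Synthetic data: every combination of three hypothetical binary signals
# (say, a parse-tree pattern, a coreference pattern, a sentiment cue),
# tagged bias-positive only when at least two of them co-occur.
X = [list(bits) for bits in product([0, 1], repeat=3)]
y = [1 if sum(x) >= 2 else 0 for x in X]

weights = fit_logistic(X, y)
for x in ([0, 0, 0], [1, 0, 0], [1, 1, 0], [1, 1, 1]):
    print(x, round(predict(weights, x), 3))
```

Each signal alone is only a weak indicator in this toy set, but the fitted composite separates the cases cleanly. The learned weights also stay inspectable, which suits the transparency goal: each weight shows how much one structural signal contributes.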

Initial contributions will focus primarily on tagging several cognitive biases in several source materials, which is the subject of one of my meetings next week. I’m lining up a group of people interested in the project who will be ready to jump on board once our groups have synced after next Thursday. Once those new additions and the groups already on board have produced more than 500 bias-positive and 500 bias-negative tagged sentences for a given bias, the analysis of that bias can begin: structural patterns in both the positive and negative samples will be documented, after which detection algorithms may be proposed.
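The 500/500 threshold can be checked mechanically for each bias. Here is a minimal Python sketch, assuming a hypothetical record of one `(bias_name, is_positive)` pair per tagged sentence. The counts in the usage example are made up.

```python
from collections import Counter
from typing import Iterable

THRESHOLD = 500  # "more than 500" positive and negative sentences per bias


def ready_biases(tags: Iterable[tuple[str, bool]], threshold: int = THRESHOLD) -> list[str]:
    """Return biases whose tagged pool exceeds `threshold` on BOTH the positive and negative side.

    `tags` holds one (bias_name, is_positive) pair per tagged sentence (hypothetical format).
    """
    counts = Counter(tags)
    biases = {bias for bias, _ in counts}
    return sorted(
        b for b in biases
        if counts[(b, True)] > threshold and counts[(b, False)] > threshold
    )


# Made-up tallies: anchoring has enough of both kinds; framing lacks negatives.
tags = (
    [("anchoring", True)] * 520
    + [("anchoring", False)] * 610
    + [("framing", True)] * 700
    + [("framing", False)] * 120
)
print(ready_biases(tags))  # ['anchoring']
```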

At present I’m focused on vetting and syncing all processes while completing the buildout of the website and preparing a pool of volunteers, all in my spare time, so any assistance and suggestions on building out the reference material, and on explaining it to the relative satisfaction of all, are welcome. I agree that your assistance with the material outlined above would be greatly valued moving forward.

@Mark Nuzz I’m curious which biases you see a need to apply to Uplift or other mASI. The mASI thought process is quite different, so bandwidth- and memory-related biases wouldn’t serve the same purpose.