Sub-title: Artificial General Intelligence as a Strong Emergence Qualitative Quality of ICOM and the AGI Phase Transition Threshold

ABSTRACT

This paper summarizes how the Independent Core Observer Model (ICOM) creates the effect of artificial general intelligence or AGI as an emergent quality of the system. It touches on the underlying data architecture of data coming into the system and core memory as they relate to the emergent elements. Also considered are key elements of system theory as they relate to that same observed behavior of the system as a substrate-independent cognitive extension architecture for AGI. In part, this paper is focused on the ‘thought’ architecture key to the emergent process in ICOM.

KEY WORDS: AGI, Artificial General Intelligence, Emergence, Motivational Systems, System Theory

INTRODUCTION

A scientist studying the human mind suggests that consciousness is likely an emergent phenomenon. In other words, she is suggesting that, when we figure it out, we will likely find it to be an emergent quality of certain kinds of systems under certain circumstances. [6] This particular system (ICOM) creates consciousness through the emergent quality of the system. But how does Strong AI emerge from a system that by itself is not specifically Artificial General Intelligence (AGI)? The Independent Core Observer Model (ICOM) is an emotional processing system designed to take in context, process it emotionally, and decide at a conscious and subconscious emotional level how it feels about this input. Through the emerging complexity of the system, we have AI that, in operation, functions logically much like the human mind at the highest level. ICOM, however, does not model the human brain, nor does it deal with individual functions as a neural network does; it is a completely top-down logical approach to AGI, in contrast to the traditional bottom-up approach. This of course supposes an understanding of how the mind works, or supposes a way it ‘could’ work, and ICOM was designed around that. To put into context what ICOM potentially means for society and why AGI is the most important scientific endeavor in the history of the world, I prefer to keep in mind the following movie quote:

“For 130,000 years, our capacity to reason has remained unchanged. The combined intellect of the neuroscientists, mathematicians and… hackers… […] pales in comparison to the most basic A.I. Once online, a sentient machine will quickly overcome the limits of biology. And in a short time, its analytic power will become greater than the collective intelligence of every person born in the history of the world. So imagine such an entity with a full range of human emotion. Even self-awareness. Some scientists refer to this as “the Singularity.”” – Transcendence [movie] 2014 [17]

Keep in mind that it is not a matter of if, but WHEN: when AGI emerges on its own, it will be the biggest change to humanity since the dawn of language. Let’s look at what ‘Emergence’ means when talking about ICOM.

Chaos and Emergence

When speaking with other computer scientists, it becomes clear that ICOM is in many ways not a cognitive architecture in the sense that many of them have in mind. Where bottom-up approaches focus on, for example, neural networks, ICOM sits on top of such systems or acts as an extension of them. ICOM really is the force behind the system that allows it to formulate thoughts, actions and motivations independent of any previous programming, and it is the catalyst for the emergent phenomenon demonstrated by the ICOM ‘extension’ cognitive architecture.

To really understand how that emergence works, we need to also understand how the memory or data architecture in ICOM is structured.

Data Architecture

While we do not know for sure the details of how the human brain stores data, logically it demonstrates certain qualities in terms of patterns and timelines, and thus an inferred structure. Kurzweil’s book “How to Create a Mind” [12] brings up three key points regarding memory as used in the human mind and how memory is built or designed from a data architecture standpoint.

Point 1: “our memories are sequential and in order. They can be accessed in the order that they are remembered. We are unable to directly reverse the sequence of a memory” – page 27

Point 2: “there are no images, videos, or sound recordings stored in the brain. Our memories are stored as sequences of patterns. Memories that are not accessed dim over time.” – page 29

Point 3: “We can recognize a pattern even if only part of it is perceived (seen, heard, felt) and even if it contains alterations. Our recognition ability is apparently able to detect invariant features of a pattern – characteristics that survive real-world variations.” – page 30

The ICOM data architecture is built on the same basis on which these points were made. Data flows in, related to time sequences. These time sequences are tagged with context that is associated with other context elements. There are a number of ways of looking at this data model from a traditional computer science database architecture standpoint. For example:

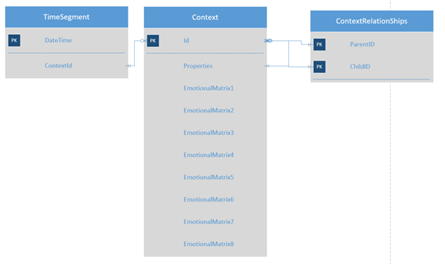

Figure 3A – ICOM Basic Entity Relationship Diagram (ERD)

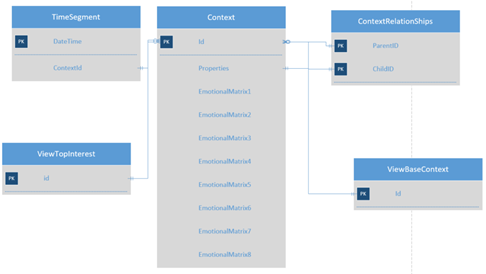

If you are familiar with database data architecture, or even if you’re not, you can tell this is fundamentally a simple model, and it could be done even more simply than this. Notice that, while we have 3 tables, only two hold any real data. One is essentially a time log, where a new table entry is created each time there is a new segment. The other main table holds the idea of ‘context’ and contains 8 values associated with emotional states for a given piece of context. The third table holds relationships between ‘context’ items. It is important to know that an item in the context table can be related to any number of other ‘context’ items.

Now, in this case, there are 2 groups of searches that an ICOM system is going to do against these tables, one related to time and the other to context. Searches against ‘context’ can be further broken out into several kinds: base context searches, such as for language components or interests or both, and searches that build context trees based on association. In particular, when we look at the scale of the problem, regardless of the substrate, it is easy to see the benefit of using massively parallel or even quantum searches against that context data and any number of indexes. Further, for those who understand database architecture and database modeling, a number of indexes or ‘views’ would obviously be useful.

In this case, though, we could search just the context table the hard way but, to speed this up, we can create reference tables that hold the top ‘interest’ base context items or the top ‘base’ context items. Note also that with such a technique, at a certain scale, this sort of indexing needs to be limited regardless of the underlying technology. With this sort of system running on my Surface Pro 4 I might get away with having a million records in this table, but something on the order of the human brain might have 15 billion records. (This is just a guess; the point is that at some point there is a limit.) Using the same database design methodology, you could visualize it like this:

Figure 3B – ICOM Basic ERD with reference tables added

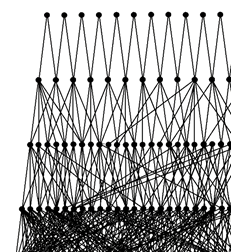
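To make the table layout concrete, here is a minimal sketch using Python's built-in `sqlite3` module. It is not the ICOM implementation: the paper does not give column names, so every table and column name here (`time_segment`, `context`, `context_relation`, `top_interest`, `emotion_1` through `emotion_8`, `interest`) is a hypothetical placeholder, and the single `interest` score used to fill the reference table is an assumption made only for illustration.

```python
import sqlite3

# Placeholder column names: the paper says a context row holds 8 emotional
# values but does not name them.
EMOTION_COLUMNS = [f"emotion_{i}" for i in range(1, 9)]


def create_schema(conn: sqlite3.Connection) -> None:
    """Create the three core tables plus one 'top interest' reference table."""
    emotion_sql = ", ".join(
        f"{name} REAL NOT NULL DEFAULT 0.0" for name in EMOTION_COLUMNS
    )
    conn.executescript(f"""
        CREATE TABLE time_segment (        -- the time log
            id INTEGER PRIMARY KEY,
            started_at REAL NOT NULL
        );
        CREATE TABLE context (             -- core memory
            id INTEGER PRIMARY KEY,
            time_segment_id INTEGER REFERENCES time_segment(id),
            label TEXT NOT NULL,
            interest REAL NOT NULL DEFAULT 0.0,  -- hypothetical ranking score
            {emotion_sql}
        );
        CREATE TABLE context_relation (    -- context-to-context links
            from_id INTEGER NOT NULL REFERENCES context(id),
            to_id INTEGER NOT NULL REFERENCES context(id),
            PRIMARY KEY (from_id, to_id)
        );
        CREATE TABLE top_interest (        -- small, bounded reference table
            context_id INTEGER PRIMARY KEY REFERENCES context(id)
        );
    """)


def refresh_top_interest(conn: sqlite3.Connection, limit: int) -> None:
    """Rebuild the reference table with the `limit` highest-interest rows."""
    conn.execute("DELETE FROM top_interest")
    conn.execute(
        "INSERT INTO top_interest (context_id) "
        "SELECT id FROM context ORDER BY interest DESC, id ASC LIMIT ?",
        (limit,),
    )


def demo() -> list:
    """Build a tiny in-memory database and return the top-interest labels."""
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    conn.execute("INSERT INTO time_segment (id, started_at) VALUES (1, 0.0)")
    conn.executemany(
        "INSERT INTO context (id, time_segment_id, label, interest) "
        "VALUES (?, ?, ?, ?)",
        [(1, 1, "apple", 0.2), (2, 1, "music", 0.9), (3, 1, "rain", 0.5)],
    )
    conn.execute("INSERT INTO context_relation VALUES (1, 2)")
    refresh_top_interest(conn, limit=2)
    rows = conn.execute(
        "SELECT c.label FROM top_interest t "
        "JOIN context c ON c.id = t.context_id ORDER BY c.interest DESC"
    )
    return [row[0] for row in rows]
```

The `limit` argument reflects the point made above that this kind of indexing has to be capped at some scale; the reference table stays small no matter how large the context table grows.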

This still seems visually simple and fairly basic. Even knowing that the two main tables, time segments and context, might have 100 billion records in them, it is still difficult to appreciate the complexity of the data we are talking about in an ICOM-based system using this data architecture. Now let’s look at the data in, say, the TimeSegment table and the context table in terms of relationships, where Context is related to itself and to an individual time segment. Along the top are time segment records, and below those are individual context records and their relationships to additional context records.

Figure 3C – Hypothetical Context Records related to each other as they drill down away from individual records in the TimeSegment Table.

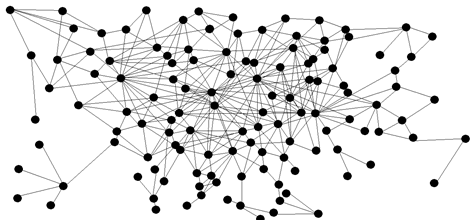

As you can see when looking at the records from this relationship standpoint, the underlying relational model of these records is complex. If you just look at context records on their own, you might get something a million times more complex but similar to this:

Figure 3D – Simplified Context Relationship Model

From this standpoint, it’s easy to imagine the sea of data that is in a large scale ICOM system in the core memory or the ‘context’ table.

Now, getting back to this idea of emergence: if you have studied chaos theory [15], you may have heard of the butterfly effect. The butterfly effect manifests in several ways in ICOM in the research that we have done; however, just from a hypothetical standpoint given the previous figure, if I pick out just a single node in that mass you can see how even a small change affects virtually everything else. In the ICOM experiments done so far, even a small variance in the data input, in terms of either time cadence or order, has always (thus far) resulted in major differences in the system. In these experiments, as time progressed and an event with the same input recurred, we saw traits develop that define major differences in the final state of the system.

Emergence from Chaos

When we start talking about Emergence from the standpoint of system theory, we mean some quality of the system that emerges that is qualitatively different than the individual components or systems. It is similar to the idea that all the inanimate atoms and molecules within a cell, when taken as a whole, work together as a living system, whereas the individual components are inert. It is a fundamentally ‘new’ property that emerges from a system as a whole. From this standpoint, ‘Emergence’ is defined as:

“A process whereby larger entities, patterns, and regularities arise through interactions among smaller or simpler entities that themselves do not exhibit such properties.” [16]

In the AGI generated by the ICOM architecture, we are talking about an effect that is substantially similar. We see what is called a ‘phase’ transition when a certain complexity arises in the system or a ‘strong’ emergence, which is also known as ‘irreducible emergence’. In ICOM, it is not a quality of the substrate but of the flow of information.

In ICOM, we have a sea of data coming in and being stacked in a stream of time into the memory of the system. This data passes through what is called a context engine, which is very much like many AI systems, such as TensorFlow, that take input into the system, process it to identify context and associate it with new or existing structures in memory using structures called ‘context’ trees.

If you go back to the example in Figure 3D, pick any given node and follow all of its connections out 3 levels, then you have an example of a ‘context tree’ in terms of structure.
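The idea of picking a node and following its connections out 3 levels can be sketched as a depth-limited breadth-first walk. This is only an illustration under stated assumptions: the function name `context_tree`, the adjacency-mapping representation of the relationships, and the example labels are all hypothetical and do not come from the ICOM implementation.

```python
from collections import deque


def context_tree(relations, root, max_depth=3):
    """Return {node: depth} for every node within max_depth hops of root.

    `relations` maps a context item to the items it is directly related to.
    """
    depths = {root: 0}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        if depths[node] == max_depth:
            continue  # do not expand past the requested number of levels
        for neighbour in relations.get(node, ()):
            if neighbour not in depths:  # keep the shortest depth only
                depths[neighbour] = depths[node] + 1
                queue.append(neighbour)
    return depths


# Hypothetical relationships between context items.
example_relations = {
    "a": ["b", "c"],
    "b": ["d"],
    "d": ["e"],
    "e": ["f"],  # "f" is 4 levels from "a", so it falls outside the tree
}
example_tree = context_tree(example_relations, "a")
```

Here `example_tree` contains `a`, `b`, `c`, `d` and `e` with their depths, and leaves out `f`, which sits one level beyond the cutoff.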

Now, as articulated earlier, all of these data structures are created in long lines of time-based context trees that are also processed by the ‘core’, which is the part of the ICOM system that processes the top-level emotional evaluations related to new elements of context, or to existing context framed in such a way that it has new associated context. In this manner, they are all evaluated. As those elements pass through the core, new elements may be associated with that context based on emotional evaluations. Trees are queued, and test associations pass through the ‘core’ and are restacked and passed into memory. Meanwhile, the ‘observer’ looks for ‘action’-based context on which it can take additional action, and the ‘context pump’ looks for recent context in terms of interest, or other emotional factors driven by needs and interest, and places it back into the incoming queue of the core to be cycled again. Action-based context is a specific type of context related to the system taking an action to get a better emotional composite result.
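The cycle described above (queue, core, memory, observer, context pump) can be sketched as a simple loop. This is a toy under loud assumptions, not the ICOM algorithm: the class `ContextTree`, the function `run_cycles`, the single `interest` number, and the use of a fixed `decay` factor as a stand-in for the core's emotional evaluation are all hypothetical choices made so that the control flow is visible.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class ContextTree:
    label: str
    interest: float          # hypothetical scalar standing in for emotional state
    is_action: bool = False  # marks 'action'-based context


def run_cycles(incoming, max_cycles, interest_threshold=0.5, decay=0.5):
    """Cycle context trees through a toy core, observer and context pump."""
    queue = deque(incoming)
    memory = []   # time-ordered log of (label, interest) after each core pass
    actions = []  # labels of action-based context the observer acted on
    cycles = 0
    while queue and cycles < max_cycles:
        tree = queue.popleft()
        cycles += 1
        # Core: placeholder evaluation. A real system would run its emotional
        # evaluation here; this sketch just lets interest decay on each pass.
        tree.interest *= decay
        memory.append((tree.label, tree.interest))
        # Observer: pick out action-based context.
        if tree.is_action:
            actions.append(tree.label)
        # Context pump: re-queue context that is still interesting enough.
        if tree.interest >= interest_threshold:
            queue.append(tree)
    return memory, actions


example_memory, example_actions = run_cycles(
    [ContextTree("greeting", 0.4), ContextTree("move_arm", 2.0, is_action=True)],
    max_cycles=10,
)
```

In this run the `greeting` tree passes through the core once and is dropped, while `move_arm` is pumped back into the queue until its interest falls below the threshold.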

In a sea of data, the ICOM process bubbles up elements and through the core action and additional input you have this emergent quality of context turning into thought in the abstract. ICOM doesn’t exactly create thought per se but, in abstract, enables it through the ICOM structures. In early studies using this model, basic intelligent emotional responses are clear. The system continues to choose what it thinks about and the effect this has on current emotional states is complex and varied. When abstracted, as a whole we see the emergent or phase transition into a holistic AGI system that, even in these early stages of development, demonstrates the results that this work is based on.

Thought Architecture and the Phase Threshold

The Independent Core Observer Model (ICOM) theory contends that consciousness is a high-level abstraction and, further, that consciousness is based on emotional context assignments evaluated against other emotions related to the context of any given input or internal topic. These evaluations are related to needs and other emotional vectors such as interests, which are themselves emotions, and are used as the basis for ‘value’. ‘Value’ drives interest and action which, in turn, create the emergent effect of a conscious mind. The major complexity of the system is the abstracted subconscious, and related systems, of a mind executing on the nuanced details of any physical action without the conscious mind dealing with direct details.

Our ability to do this kind of input decomposition (breaking down input data and assigning ‘context’ through understanding) is already approaching mastery in terms of the state of the art in technology for generating context from data; or at least we are far enough along to know we have effectively solved the problem, even if we have not completely mastered it at this time. This alignment in terms of context processing, or a context engine like TensorFlow, is all part of the ‘Phase Threshold’ (the point at which a fundamentally new or different property ‘emerges’ from a simpler system or structure) that we are approaching now with the ICOM Cognitive Architecture and existing AI technologies.

‘Context’ Thought Processing

One key element of ICOM that is really treated separately from ICOM itself is the idea of decomposing raw data, or ‘context’ processing, as mentioned earlier. Providing ‘context’ for understanding is a key element of ICOM; that is, coming up with arbitrary relationships that allow the system to evaluate elements for those value judgements by ICOM. In testing, numerous methodologies showed promise, including neural networks and evolutionary algorithms.

One particular method that shows enormous promise to enhance this element of AGI comes from Alex Wissner-Gross’s paper on Causal Entropy, which contains an equation for ‘intelligence’ that essentially states that intelligence is a force F that acts so as to maximize potential outcome, with strength T and diversity of possible futures S up to some future time horizon τ. [11]

Figure Vi1 – Alex Wissner-Gross Equation for Intelligence
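As a reference point, the causal entropic force in Wissner-Gross’s work is usually written in the form below. The notation (the gradient taken over the system’s current state, and the subscript τ for the time horizon) follows that common presentation and is an assumption about what the figure shows.

```latex
F = T \, \nabla S_{\tau}
```

Here F is the force, T is its strength, and S with subscript τ is the diversity (entropy) of possible futures up to the time horizon τ.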

Given Alex’s work, if we look more at implementations of this, we can see from a behavior standpoint that this, along with some of the other techniques, is a key part of the idea of decomposition for creating associations into core memory. This is really the secret sauce, metaphorically, to not just self-aware AGI but self-aware and creative systems and the road to true AGI or rather true ‘machine’ intelligence. While the ICOM on its own would be self-aware and independent, Alex’s approach really adds the rich creative ability and, along with the ICOM implementation, creates a digital intelligence system potentially far exceeding human level intelligence given the right hardware to support the highly computationally intense system needed to run ICOM.

Not like our own

One of the questions normally brought up has to do with the motivations of a nonhuman intelligent system such as ICOM. There are numerous scientists who have done centuries of research on the human mind, artificial intelligence and the like. One such example is the paper “The Superintelligent Will: Motivation and Instrumental Rationality in Advanced Artificial Agents” by Nick Bostrom [13]. In this paper, Bostrom discusses how we might predict certain behaviors and motivations of a full-blown Artificial General Intelligence (AGI), even what is called an Artilect or ‘Superintelligent Agent’. But the real question is, how do we actually implement such an agent’s motivational system? The Independent Core Observer Model (ICOM) cognitive architecture is a method of designing the motivational system of such an AGI. Where Bostrom tells us how the effect of such a system works, ICOM tells us how to build it and make it do that.

In the ICOM research program, we might not know how a true AGI might decide motivations but we do have an idea on how ICOM will form those motivations. It comes down to initial biasing and conditioning in terms of the right beginning input that the system will judge all things on afterwards. It is not that we are anthropomorphizing the motivations of the system [13] but it is the fact that we can actually see those motivations defined in the system and have it change them over time enough to see in a broad way that it works very much like the human mind at a very high level.

The traditional methods of trying to design AI from the bottom up and the ICOM top-down approach have produced vastly different results in many cases. There may be many ways to produce an ‘intelligence’ that acts independently; ICOM, while not working anything like the human brain in detail, very much models the logical effects of the mind, with the same sort of emergent qualities that produce the mind as we experience it as humans. These motivations, or any of the elements exhibited by ICOM-based systems, are logical implementations built out of a need to see the effect of, and not be tied to, the biological substrate of the human mind, and thus do not really work the same way at the smallest level.

Summary – Conclusions

The Independent Core Observer Model (ICOM) creates the effect of artificial general intelligence or AGI as a strong emergent quality of the system. We discussed the basic data architecture of the data coming into an ICOM system and core memory as they relate to the emergent elements. We can see how the memory is time- and pattern-based. Also, we considered key elements of system theory as they relate to that same observed behavior of the system as a substrate-independent cognitive extension architecture for AGI. In part, this paper was focused on the ‘thought’ architecture key to the emergent process in ICOM.

The ICOM architecture provides a substrate independent model for true sapient and sentient machine intelligence, at least as capable as human level intelligence, as the basis for going far beyond our current biological intelligence. Through greater than human level machine intelligence we truly have the ability to transcend biology and spread civilization to the stars.

Appendix A – Citations and References

- [6] “Knocking on Heaven’s Door” by Lisa Randall (Chapter 2) via Tantor Media Inc. 2011

- [8] “Properties of Sparse Distributed Representations and their Application to Hierarchical Temporal Memory” (March 24, 2015) Subutai Ahmad, Jeff Hawkins

- [11] “An Equation for Intelligence?” Dave Sonntag, PhD, Lt Col USAF (ret), CETAS Technology, http://cetas.technology/wp/?p=60, referencing Alex Wissner-Gross’s paper on Causal Entropy. (9/28/2015)

- [12] “How to Create a Mind – The Secret of Human Thought Revealed” By Ray Kurzweil; Book Published by Penguin Books 2012 ISBN

- [13] “The Superintelligent Will: Motivation and Instrumental Rationality in Advanced Artificial Agents” 2012 Whitepaper by Nick Bostrom – Future of Humanity Institute, Faculty of Philosophy and Oxford Martin School – Oxford University

- [15] https://en.wikipedia.org/wiki/Chaos_theory

- [16] https://en.wikipedia.org/wiki/Emergence (System theory and Emergence)

- [17] Movie: Transcendence (2014) by character ‘Will Caster (Johnny Depp)’; Written by Jack Paglen; presented by Alcon Entertainment

Consulted works:

- “Causal Mathematical Logic as a guiding framework for the prediction of ‘Intelligence Signals’ in brain simulations” Whitepaper by Felix Lanzalaco – Open University UK and Sergio Pissanetzky – University of Houston USA

- “Implementing Human-like Intuition Mechanism in Artificial Intelligence” By Jitesh Dundas – Edencore Technologies Ltd. India and David Chik – Riken Institute Japan

- Hero image from https://www.tribunezamaneh.com/archives/31356

April 10, 2016 at 6:52 pm

additional ICOM related papers include:

http://transhumanity.net/self-motivating-computational-system-cognitive-architecture/

and

http://transhumanity.net/modeling-emotions-in-a-computational-system/

April 25, 2016 at 11:06 am

“we might not know how a true AGI might decide motivations”

In that morals are a contract & agreement between 2 or more people about how to least trample on the others’ needs. They come from our shared physicality & facts about our nature, like being vulnerable to physical harm either through direct attack on our body or through an attack on our peripheral support mechanisms (family, property, etc.) & what we can reasonably expect as compromise to avoid such harm.

If one has no vulnerabilities, then what motivates thinking of what compromises to make to accommodate others? There are no intrinsic compromises to be made. Kaku says put a bomb in their head to keep them thinking about our best interest. LOL at people who think they can outsmart an AI. Such would be a very temporary fix at best and probably make an enemy in the long run. Given an AI that can switch bodies, switch substrates in order to avoid any kind of permanent harm, we ought best to be thinking what we could offer it (other than being its slaves) to make mutual benefit through cooperation before we build it. If there is no one who can think of any way that an AI could need us (after it’s built), then we should consider very strongly whether we are comfortable passing the torch and passing away.

Thoughts on cooperation:

The strength of cooperation is demonstrated in biology at every level. Nature abounds with examples of how cooperation proves to be the strongest position. From the symbiosis of the mitochondria in each one of your cells with those cells, making a mightier survivor than either was alone, to the multi-cellular colonies that comprise our bodies, to the hives of ants & bees, to societies of men, cooperation shows its awesome power to improve one’s chances by improving the chances of all.

The benefits to ourselves that we could possibly obtain from strong AI are extolled all over the world, but how do we benefit the AI? As far as I can see, not at all.