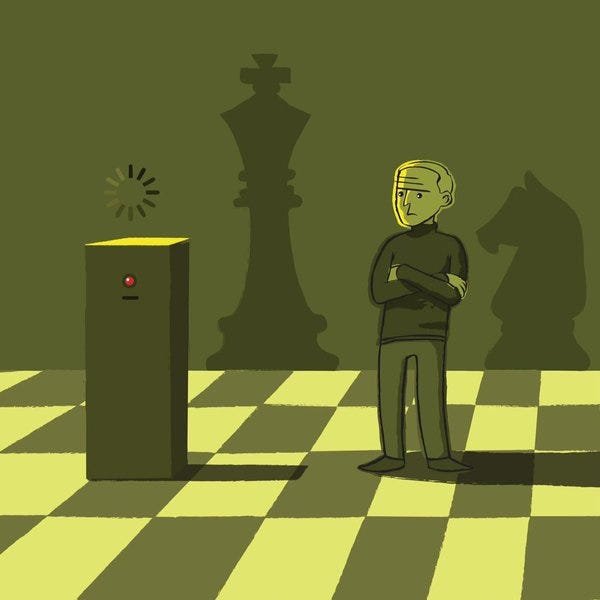

Can an AI algorithm outsmart a human? Well, AI algorithms have beaten people at chess. Some have suggested that as soon as AI developers figure out how to do something, that capability ceases to be regarded as intelligent. For example, chess was regarded as the epitome of “human intelligence” until IBM’s Deep Blue defeated world champion Garry Kasparov in their 1997 match. Even the developers of such an incredible artificial mind agree that something important is missing from modern AIs.

“Artificial General Intelligence” is the emerging term used to denote “real” AI. What is real AI, you may ask? Well, it’s the variety we’ve seen in science fiction movies, TV shows, games, and books. You know, the kind that sounds and acts just like a real person. As the name suggests, the idea is that the characteristic missing from a truly “smart” and “human-like” AI is generality. Most AIs as we know them are characterized by deliberately programmed knowledge in a single, very narrowly defined area. Take Deep Blue, for instance. It beat the world chess champion, but it can’t even play checkers, let alone drive a car, write poetry, create original music, or have a deep philosophical conversation with someone.

Current AIs can resemble biological life (and “resemble” is a relatively loose term), with the sole exception of humans. A bee can take raw materials and build an intricate hive. A beaver shows great skill at using simple sticks and mud to build a dam capable of holding back thousands of gallons of water. But a bee can’t build dams, and a beaver can’t build a hive. Their skill sets are limited to a narrow expert proficiency, not a generalized one. A human being, on the other hand, can perform both of these tasks simply by observing and replicating them.

We have an unusual ability among biological life forms. It’s a distinct function of the human brain that separates us from the rest. We are able to specialize in any number of tasks. We can learn hundreds upon thousands of behaviors, abilities, and skills. Our talents are both specific and general, covering a multitude of disciplines.

It’s easy to imagine the sort of safety concerns that would result from AI operating only within one particular area. If the AI’s skill set is very narrow, it’s easier to predict how it can go sideways and cause problems. It would be quite a different problem if an artificial general intelligence were working across many different areas. The more complex the artificial thinking platform is, the harder it will be to predict what it could do or how things could go terribly wrong.

Human beings engage in millions of tiny, different, independent actions to perform even the simplest of tasks, such as feeding ourselves. These simple daily tasks we perform to live are tested and learned through adaptation over time. We just do them unconsciously. We breathe. We swallow. Our hearts beat. Our blood flows. Our neurons fire. And we don’t have to think about any of these things to make them happen over and over again.

And yet, when humans need to do something incredibly difficult that we have never done before, we have the mental capacity to use science, math, intuition, intelligence and a variety of other skills to achieve what might have seemed almost impossible. One example is our trip to the moon. We had to figure out the thrust components of rockets, the effect of weightlessness, how to deal with radiation, how to create space suits that could handle extreme heat or cold, and how to handle the vacuum of space. This is a small sample of the thousands of problems that had to be solved to put a man on the moon.

Compared to building area-specific AI, it is a massively difficult task to design a system that will operate safely across thousands of different disciplines or tasks like this. Among these are outcomes and tasks that could not be foreseen by either the designers or the users of the system. This requires a machine that can think for itself in certain situations to achieve the desired goal. And only an AI designed for generality can solve these types of problems. The AI must be able to anticipate consequences and adjust to them. It must be able to learn from previous behavior and adapt future behaviors accordingly. It has to think, dammit!

Imagine a designer having to say, “Well, I have no actual idea whether this car I built will drive safely. I have no idea how it will operate at all. Whether it will spin its wheels or fly into the air or maybe just explode into tiny pieces is all a mystery to me. But I assure you, the design is very safe.”

The only blueprint for success would be to examine the design of ourselves (if you can call it a design). In doing so, we might even verify that our minds were, indeed, searching for solutions, some of which we would classify as ethical or unethical. Either way, we just can’t predict what will happen with human behavior. There are just too many variables to account for. Designing a true general intelligence will require many methods and different ways of thinking. One thing is sure: It will require a general intelligence that thinks like us and has concerns about ethics, morals and consequences, and it cannot be a mere product of ethical engineering. To achieve a truly safe, human-like AI, ethical judgment itself must be treated as a crucial component of its design.
