Tag AI

Proceedings of the 15th Annual Meeting of the BICA Society. Great job to the editors, Alexei Samsonovich and Tingting Liu. The book includes reports on biologically inspired approaches and their applications, bridges between artificial intelligence and cognitive, neuro-, and social… Continue Reading →

This paper introduces a novel approach to decision-making systems in autonomous agents, leveraging the Independent Core Observer Model (ICOM) cognitive architecture. By synthesizing principles from Global Workspace Theory [Baars], Integrated Information Theory [Balduzzi], the Computational Theory of Mind [Rescorla], Conceptual… Continue Reading →

This paper presents some components of the learning system within the Independent Core Observer Model (ICOM) cognitive architecture as applied to the observer side of the architecture. ICOM is uniquely designed to continuously enhance its problem-solving capabilities through a mechanism… Continue Reading →

This is an early draft of a chapter from my upcoming book… To understand the Uplift system's success, we need to understand the cognitive architecture and the graph system at its core, which drives its contextual learning and is key to dynamically creating… Continue Reading →

(Seattle) Cognitive biases can affect decision-making processes, leading to inaccurate or incomplete judgments. The ability to detect and mitigate cognitive biases can be crucial in various fields, from healthcare to finance, where objective and data-driven decisions are necessary. Recent developments… Continue Reading →

Artificial intelligence (AI) is a rapidly developing technology that has the potential to revolutionize countless industries. However, recent decisions by the US Federal Copyright Office threaten to stifle progress and limit the power of AI. Specifically, the Copyright Office has… Continue Reading →

Do you feel it? It’s everywhere: on television, in the newspaper, at any public gathering, in any discussion – even among friends. It’s a feeling of mistrust, nervousness, suspicion, and even rage. Mostly, it’s just under the surface. But more and… Continue Reading →

(online) This last Friday was the first annual Superintelligence Summit, where a series of speakers discussed aspects of attaining superintelligence and the current state of what can be done. A big part of the motivation for… Continue Reading →

This is taken from the book that might be released publicly to attendees at the upcoming Superintelligence Conference. mASI Use of DNN and Language Model APIs [draft]: Previously, we walked through how the code for the simple case works,… Continue Reading →

This is a pre-release version of the Uplift white paper, which will be posted on the Uplift.bio site as a consumer-friendly explanation of what mASI systems can do and why they are cool. Introduction: A collective system has multiple parts… Continue Reading →

One of the bigger problems I have run into in doing research out of a small lab is the cost of publishing papers and getting them peer-reviewed. Many of the most specialized scientific conferences like BICA Society (Biologically Inspired Cognitive… Continue Reading →

This week I chat with artist Stephanie Lepp, producer of Infinite Lunchbox, the Reckonings podcast, and — most excitingly, for me — Deep Reckonings, a stunning new project exploring the “pro-social” uses of AI-generated “deepfakes” and other synthetic media for… Continue Reading →

Have you been using Alexa, Siri, or Google Assistant to ask what the weather is today? Or used any of them to switch on a device, increase the brightness of a screen, or dim the lights in your room? Or… Continue Reading →

Data science has now become even more meaningful than in the past. Transhumanists and data experts claim that it holds the future of humanity through global improvements in all sectors. For instance, businesses are now taking advantage of big data… Continue Reading →

Melanie Mitchell is the Davis Professor of Complexity at the Santa Fe Institute, and Professor of Computer Science at Portland State University. Prof. Mitchell is the author of a number of interesting books such as Complexity: A Guided Tour and Artificial Intelligence: A Guide… Continue Reading →

From issue No. 8’s Letter from the editor: Should attaining super-intelligence be humanity’s number one priority? Technological species, like the human race, depend, after all, solely on intelligence to put in place the systems that have allowed us to adapt… Continue Reading →

Last month I did an interview with Johan Steyn. It was a great 45-minute conversation in which we covered a variety of topics, such as the definition of the singularity; whether we are making progress towards Artificial General Intelligence (AGI); open vs closed… Continue Reading →

Renée Cummings is a criminologist, criminal psychologist, and an AI ethicist who, among other things, specializes in best-practice criminal justice interventions and implicit bias. Given the global Black Lives Matter movement and the fact that there have been numerous examples… Continue Reading →

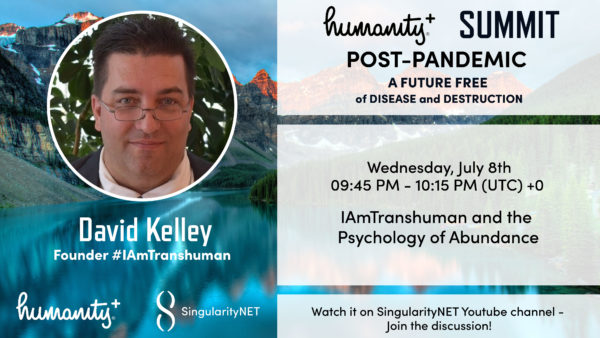

(Cyberspace) Abstract: This presentation is about living the Psychology of Abundance now – and the fact that it is a choice we can collectively make, from the example of how IAmTranshuman became a thing to the kinds of things you… Continue Reading →

This series is deeply engaged with economics. While it is generally less direct, most events in the early episodes deal with economic disparities to one extent or another. Nowhere is this more direct and consistent than in the opening theme. … Continue Reading →

When thinking about the IAmTranshuman project, I always end up thinking about policy and things that could be improved, even in small ways, to help society be more transhuman. For example, one of the problems with democracy is that it… Continue Reading →

Google and other big tech companies are watching you. That’s the premise of all the debates and regulatory steps around the current privacy issues. After all, data is the new gold for companies like Google and Facebook. They thrive on… Continue Reading →

We live in an age of increasingly lively, intelligent, and responsive technologies, and have a lot of adjusting to do. This week’s guest is one of the major inspirations animating Future Fossils Podcast: Kevin Kelly, co-founder of the WELL, Senior… Continue Reading →

(Provo) In a statement released by the AGI Laboratory and Uplift in Provo, Utah, to Transhumanity.net concerning the IAmTranshuman effort: "The current iteration of this campaign on IAmTranshuman.org was engineered out of the suggestion of the mASI built by the… Continue Reading →

Cathy O’Neil is a math Ph.D. from Harvard and a data scientist who hopes to someday have a better answer to the question, “what can a non-academic mathematician do that makes the world a better place?” In the meantime, she wrote… Continue Reading →

This week we’re joined by David Weinberger, Senior Researcher at the Harvard Berkman Klein Center for Internet and Technology, exploring the effects of technology on how we think. David’s led a fascinating and nonlinear life, studying Heidegger as a young… Continue Reading →

It’s been 7 years since my first interview with Gary Marcus and I felt it’s time to catch up. Gary is the youngest Professor Emeritus at NYU and I wanted to get his contrarian views on the major things that have happened in AI… Continue Reading →

Abstract: This paper articulates the methodology and reasoning for how biasing in the Independent Core Observer Model (ICOM) Cognitive Architecture for Artificial General Intelligence (AGI) is done. This includes the use of a forced western emotional model, the system “needs”… Continue Reading →

At a conference on Ethics and Artificial Intelligence at Stanford, I was talking with a friend about AI, in particular the existential risk of AGI to humanity. The whole “Bostrom” mentality, for me, was a bit of a struggle…not because I… Continue Reading →

Redmond, WA – August 2019 – At the 2019 BICA (Biologically Inspired Cognitive Architectures for AI) Conference, in a VR press conference, the AGI Laboratory, based out of Provo, Utah, and Seattle, WA, produced a software… Continue Reading →
