Tag: MarkWaser

Divi has entered the home stretch, with our limited beta starting next week. Since I finally have some breathing room, I thought I’d share some of what we’ve been doing and where we’re going. One of Divi’s most progressive features…

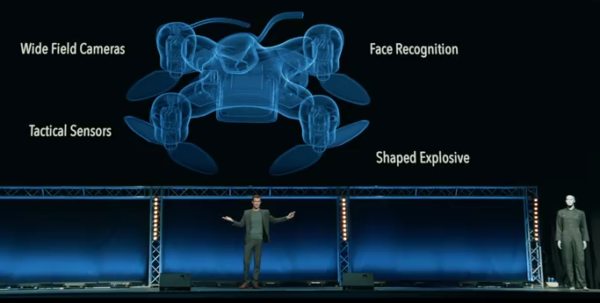

So... the Elon Musk anti-AI hype cycle has started up again (“Elon Musk, Warren G, and Nate Dogg: It’s Time to ‘Regulate’ A.I. Like Drugs”). Worse, we have Stuart Russell’s movie, Slaughterbots. Actually,…

(1 NOV 2017 – Virginia) – Mark Waser, an AGI researcher and board member of the TNC (Transhuman National Committee), is featured in a new article by the local ABC affiliate in Virginia. “NORFOLK, Va. (WVEC) — From the minute…

(JULY 2017) – At the AGI Lab in Provo, a number of people are working on different projects, and several great things are coming out of that work, including material related to…

EFTF and the EFTF Manual are a TNC (Transhuman National Committee) fundraiser. Get tickets for the Saturday, March 11th event in Provo, Utah, here: https://www.eventbrite.com/e/extreme-futures-and-technology-forecasting-work-group-winter-2017-tickets-28855253841. This work group will be an all-day workshop on the EFTF book and…

At the upcoming EFTF, which is being used to raise funds for the TNC and to create a transhuman political lobby in DC, we are adding a new feature to the event: creating a book. That means EFTF attendees…

Key Nature of AGI Behavior and Behavioral Tuning in the Independent Core Observer Model Cognitive Architecture Based Systems. Abstract: This paper reviews the key factors driving the Independent Core Observer Model Cognitive Architecture for Artificial General Intelligence specific to modeling emotions…

The official summary of the 2016 TNC Convention includes the new official Board of Directors. The Executive Committee: Chairperson: David J Kelley; Vice Chair: John Warren; Technology Chair: Mark Waser; Social Media Chair: B.J. Murphy; Compliance Chair: Daniel…

I admire Eliezer Yudkowsky when he is at his most poetic: our coherent extrapolated volition is our wish if we knew more, thought faster, were more the people we wished we were, had grown up farther together; where the extrapolation…

Rice’s Theorem (in a nutshell): Unless everything is specified, anything non-trivial (not directly provable from the partial specification you have) can’t be proved. AI Implications (in a nutshell): You can have either unbounded learning (Turing-completeness) or provability – but never…

“Some problems are so complex that you have to be highly intelligent and well informed just to be undecided about them.” – Laurence J. Peter. Numerous stories were in the news last week about the proposed Centre for the Study…

[Part 1] (originally published December 10, 2012) You’re appearing on the “hottest new game show” Money to Burn. You’ll be playing two rounds of a game theory classic against the host with a typical “Money to Burn” twist. If you…

Over at Facing the Singularity, Luke Muehlhauser (LukeProg) continues Eliezer Yudkowsky’s “Value is Fragile” theme with “Value is Complex and Fragile.” I completely agree with his last three paragraphs. Since we’ve never decoded an entire human value system, we…
